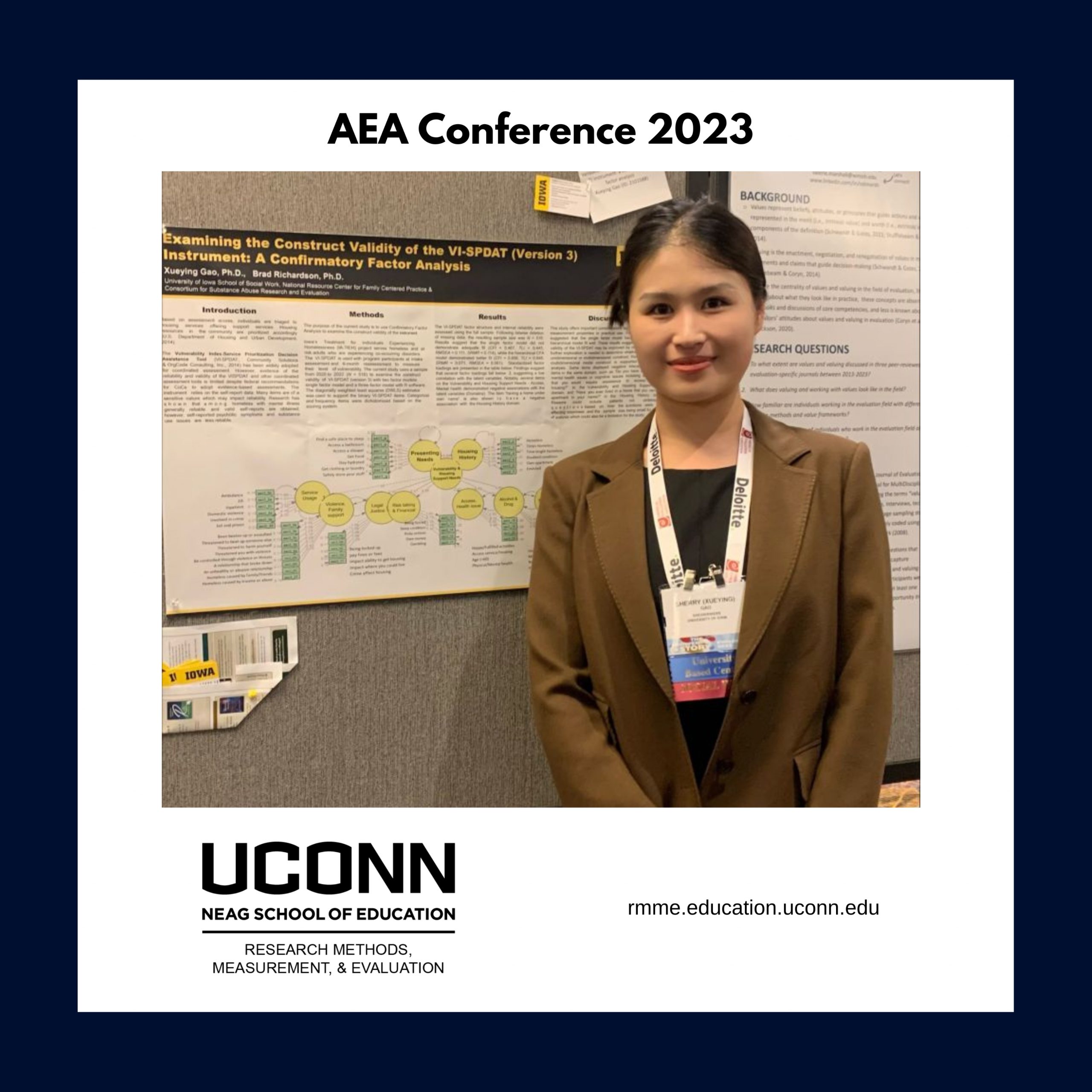

RMME Master’s alumna Xueying Gao presents her poster, “Validation of the VI-SPDAT (version 3) instrument: a confirmatory factor analysis,” at the 2023 AEA conference. Congratulations from the Research Methods, Measurement, & Evaluation Community on this fantastic poster presentation!

Authors: Xueying Gao and Brad Richardson

Presenter: Xueying Gao

Abstract: The Vulnerability Index-Service Prioritization Decision Assistance Tool (VI-SPDAT version 3; Community Solutions & OrgCode Consulting, Inc., 2020) is the primary assessment tool used to measure individuals’ vulnerability (or self-sufficiency). Iowa’s Treatment for Individuals Experiencing Homelessness (IA-TIEH) project was conducted to advance an informed, integrated program for homeless and at-risk adults who experience co-occurring disorders. In this project, the VI-SPDAT was administered to participants at the intake assessment and at the 6-month reassessment to measure their level of vulnerability. The current study used the sample collected from 2020 to 2023 (N = 539) to examine the construct validity of the VI-SPDAT (version 3) by comparing two models: a single-factor model and a hierarchical three-factor model. Results indicated that the single-factor model did not demonstrate adequate fit (CFI = 0.467, TLI = 0.445, RMSEA = 0.111, SRMR = 0.114). The hierarchical CFA model fit better (CFI = 0.856, TLI = 0.848, RMSEA = 0.061, SRMR = 0.071), but its CFI and TLI still fell below commonly used cutoffs (e.g., 0.90), suggesting that the instrument is limited in measuring individuals’ vulnerability and other outcome parameters in research and clinical practice. In addition, some items were not associated with either the global factor or any sub-factor. The VI-SPDAT has substantial weaknesses in its theoretical alignment, item performance, and psychometric properties. We recommend developing a new multidimensional scale of vulnerability following a rigorous measurement development protocol.
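For readers less familiar with CFA fit indices, the model comparison in the abstract can be made concrete by checking the reported values against rule-of-thumb cutoffs. This is a minimal sketch: the cutoffs used here (CFI and TLI of at least 0.90, RMSEA and SRMR of at most 0.08) are an assumed, commonly cited lenient convention, not thresholds stated in the poster, and stricter conventions (e.g., CFI and TLI of at least 0.95, RMSEA of at most 0.06) also exist. The names `REPORTED`, `CUTOFFS`, and `check_fit` are hypothetical and exist only for this illustration.

```python
# Fit indices as reported in the abstract.
REPORTED = {
    "single-factor": {"CFI": 0.467, "TLI": 0.445, "RMSEA": 0.111, "SRMR": 0.114},
    "hierarchical": {"CFI": 0.856, "TLI": 0.848, "RMSEA": 0.061, "SRMR": 0.071},
}

# ASSUMPTION: a common lenient rule-of-thumb convention, not taken from the poster.
# Higher is better for CFI/TLI; lower is better for RMSEA/SRMR.
CUTOFFS = {
    "CFI": (">=", 0.90),
    "TLI": (">=", 0.90),
    "RMSEA": ("<=", 0.08),
    "SRMR": ("<=", 0.08),
}


def check_fit(indices, cutoffs=CUTOFFS):
    """Return {index_name: bool} saying whether each index meets its cutoff."""
    result = {}
    for name, value in indices.items():
        op, threshold = cutoffs[name]
        result[name] = value >= threshold if op == ">=" else value <= threshold
    return result


for model, indices in REPORTED.items():
    print(model, check_fit(indices))
```

Under these assumed cutoffs, the single-factor model misses all four, while the hierarchical model meets the RMSEA and SRMR cutoffs but not the CFI or TLI cutoffs, which matches the abstract's conclusion that fit improved but remained limited.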